The Dino 🦕, the Llama 🦙, and the Whale 🐋

This is part 1 of a series on building LLMs with Deno. View the second part here.

For a while I have wanted to understand what it would take to interact with a locally hosted large language model, and the release of DeepSeek R1, a reasoning model, was enough of a prompt to figure out how to tackle it.

My day job is Principal Technologist at CTO Labs, where we advise investors, senior executives and boards on technology. A topic that is always of interest is the impact of emerging technologies on organizations, and at the moment there is no bigger technology topic than AI. One of the ways we stay current on that impact is through our own real-world use cases.

While I knew Python is the de facto language of data, ML and AI, I was also curious about the state of TypeScript/JavaScript in the space, because those languages are a lot more familiar to me personally. I am biased, being a former core contributor to Deno, but I have always liked its “batteries included” approach to developer tooling.

I was also curious about Jupyter notebooks. All my data scientist colleagues were strong advocates of them, and when I was a core contributor at Deno, I pushed for us to build Jupyter kernel support into Deno, but I never had a personal use case that drove me to experiment with it.

Could my favorite development tool, plus a development environment specifically suited to the task, be an easy way to learn and experiment with AI? Skipping to the end, the answer is yes.

I am going to take you on the journey that I went on…

Getting started

There are a few components to our journey:

- An environment for our language model – while you can connect up to various LLM hosting environments via APIs, we are going to leverage the Ollama framework for running language models on your local machine.

- A large language model – we will use a smaller, distilled version of DeepSeek R1 that can run locally.

- A notebook – Jupyter Notebook for interactive code and text.

- Deno – a runtime that includes a built-in Jupyter kernel. We assume a recent version is installed.

- An IDE – we’ll use VSCode, with its built-in Jupyter Notebook support, plus the Deno extension.

- An AI library/framework – LangChain.js to simplify interactions with the LLM.

- A schema validator – to give the LLM’s output a predictable structure and check it. We will use zod for this.

Performance varies based on your CPU/GPU and RAM. Ensure you have enough memory and processing power for local models.

Setting up a local model

Download and install Ollama if you haven’t already. Confirm the `ollama` command is available and that the Ollama server is running on port 11434.

Install the DeepSeek R1 8b parameter model:

```sh
ollama pull deepseek-r1:8b
```

Check that it is available with:

```sh
ollama list
```

Creating a notebook

- Open VSCode. Create a new folder or path for your notebook.

- Install/update the Deno VSCode extension. Enable it with the `Deno: Enable` command from the command palette, or create a `deno.json` file in the folder.

- Use the `Create: New Jupyter Notebook` command and select Deno in the Select Kernel menu. If Deno isn’t listed, update or reinstall the Deno extension.
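With the Deno kernel selected, a quick first cell can confirm that the notebook can reach Ollama and that the model has been pulled. This is a sketch assuming Ollama's default address, `http://localhost:11434`, and its `GET /api/tags` endpoint, which lists locally available models; `hasModel` and `ollamaHasModel` are hypothetical helper names, not part of any library.

```typescript
// Only the part of Ollama's GET /api/tags response this sketch relies on.
interface OllamaTags {
  models: { name: string }[];
}

// Pure check: is a model with exactly this name (including its tag) listed?
function hasModel(tags: OllamaTags, name: string): boolean {
  return tags.models.some((m) => m.name === name);
}

// Hypothetical helper: true if the Ollama server answers and lists the model.
// Any failure (server not running, unexpected response) yields false.
async function ollamaHasModel(
  name: string,
  baseUrl = "http://localhost:11434",
): Promise<boolean> {
  try {
    const res = await fetch(`${baseUrl}/api/tags`);
    if (!res.ok) {
      await res.body?.cancel(); // release the unread response body
      return false;
    }
    return hasModel((await res.json()) as OllamaTags, name);
  } catch {
    return false;
  }
}
```

In a cell, `await ollamaHasModel("deepseek-r1:8b")` should evaluate to `true` once the server is running and `ollama pull` has finished; `false` means either the server is unreachable or the model is missing.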

Using the model

LangChain.js provides a consistent interface for interacting with large language models, including Ollama. For example:

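```typescript
import { ChatOllama } from "npm:@langchain/ollama";

// Connects to the local Ollama server (port 11434) and uses the model pulled earlier.
const model = new ChatOllama({
  model: "deepseek-r1:8b",
});
```

Generating a chain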

LangChain.js makes it easier to create modular AI workflows, or “chains.”
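Conceptually, a chain is function composition: the prompt template fills its `{question}` and `{format_instructions}` placeholders, the model turns the prompt into text, and the parser turns that text into an object. The dependency-free sketch below illustrates the idea with hypothetical helpers (`sequence`, `fillTemplate`) and a stub standing in for `ChatOllama`, so it runs without a model; it is not LangChain's implementation.

```typescript
// A step maps an input to an output, synchronously or asynchronously.
type Step<I, O> = (input: I) => O | Promise<O>;

// Hypothetical helper (not LangChain's API): run three steps in order,
// feeding each step's output into the next, like prompt -> model -> parser.
function sequence<A, B, C, D>(
  first: Step<A, B>,
  second: Step<B, C>,
  third: Step<C, D>,
): (input: A) => Promise<D> {
  return async (input: A) => third(await second(await first(input)));
}

// Prompt step: replace "{name}" placeholders with values from `vars`,
// leaving unknown placeholders untouched (no support for escaped braces).
function fillTemplate(template: string, vars: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (match: string, key: string) =>
    Object.prototype.hasOwnProperty.call(vars, key) ? vars[key] : match
  );
}

// Model step: a stub standing in for ChatOllama so the sketch runs offline.
// It ignores the prompt and always returns the same JSON text.
const fakeModel: Step<string, string> = (_prompt) =>
  '{"answer": "A stubbed answer.", "source": "https://deno.com"}';

// Parser step: turn the model's text into an object (no validation here).
const parseJson: Step<string, { answer: string; source: string }> = (text) =>
  JSON.parse(text);

// prompt -> model -> parser, using the same template text as the chain.
const sketchChain = sequence(
  (vars: Record<string, string>) =>
    fillTemplate(
      "Answer the user's question as best as possible.\n{format_instructions}\n{question}",
      vars,
    ),
  fakeModel,
  parseJson,
);
```

Calling `await sketchChain({ question: "What is a Deno?", format_instructions: "Reply in JSON." })` resolves to the stub's object. `RunnableSequence.from` provides the same shape of composition while working with real models, along with runnable methods such as `stream()` and `batch()`.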

```typescript
import { z } from "npm:zod";
import { RunnableSequence } from "npm:@langchain/core/runnables";
import { StructuredOutputParser } from "npm:@langchain/core/output_parsers";
import { ChatPromptTemplate } from "npm:@langchain/core/prompts";

// The structure we want the model to answer with.
const zodSchema = z.object({
  answer: z.string().describe("answer to the user's question"),
  source: z.string().describe(
    "source used to answer the user's question, should be a website.",
  ),
});

// Generates format instructions for the prompt and parses/validates the reply.
const parser = StructuredOutputParser.fromZodSchema(zodSchema);

// prompt -> model -> parser
const chain = RunnableSequence.from([
  ChatPromptTemplate.fromTemplate(
    "Answer the user's question as best as possible.\n{format_instructions}\n{question}",
  ),
  model,
  parser,
]);

// Display the format instructions
Deno.jupyter.md`${parser.getFormatInstructions()}`;
```

Example JSON schema

Below is an example of a valid JSON schema for an object requiring a `foo` property that is an array of strings:

```json
{
  "type": "object",
  "properties": {
    "foo": {
      "description": "a list of test words",
      "type": "array",
      "items": {
        "type": "string"
      }
    }
  },
  "required": ["foo"],
  "$schema": "http://json-schema.org/draft-07/schema#"
}
```

So the object `{"foo": ["bar", "baz"]}` matches the schema. The object `{"properties": {"foo": ["bar", "baz"]}}` is not valid, because it has no top-level `foo` property.
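To make the difference concrete, here is a hand-written check for just this example schema. It is a sketch for illustration only: `matchesFooSchema` is a hypothetical name, not part of any library, and the chain itself relies on zod for validation.

```typescript
// Hand-written check for the example schema only: an object whose required
// "foo" property is an array of strings. Other properties are allowed, since
// the example schema does not set "additionalProperties": false.
function matchesFooSchema(value: unknown): boolean {
  if (typeof value !== "object" || value === null || Array.isArray(value)) {
    return false; // JSON Schema "object" excludes null and arrays
  }
  const foo = (value as Record<string, unknown>)["foo"];
  return Array.isArray(foo) && foo.every((item) => typeof item === "string");
}

const wellFormed: unknown = JSON.parse('{"foo": ["bar", "baz"]}');
const malformed: unknown = JSON.parse('{"properties": {"foo": ["bar", "baz"]}}');

console.log(matchesFooSchema(wellFormed)); // true
console.log(matchesFooSchema(malformed)); // false: no top-level "foo"
```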

In our chain above, any output is parsed and validated by zod. Your LLM’s JSON must match the schema exactly.
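In practice, matching the schema exactly means the raw model text first has to be reduced to a single JSON object. DeepSeek R1 typically writes its reasoning inside `<think>...</think>` tags before the answer, and models often wrap JSON in a Markdown code fence tagged `json`. The sketch below is a hypothetical, dependency-free illustration of that clean-up and of the checks the chain's zod schema implies (`answer` and `source`, both required strings); `extractJson` and `parseAnswer` are illustrative names, and this is not how LangChain's `StructuredOutputParser` is implemented.

```typescript
// The shape described by the chain's zod schema.
interface Answer {
  answer: string;
  source: string;
}

// Hypothetical helper: reduce raw model text to the JSON payload.
// 1. Remove any <think>...</think> reasoning blocks.
// 2. If a Markdown code fence remains, keep only the fenced body.
function extractJson(raw: string): string {
  const withoutThinking = raw.replace(/<think>[\s\S]*?<\/think>/g, "");
  const fenced = withoutThinking.match(/`{3}(?:json)?\s*([\s\S]*?)`{3}/);
  return (fenced?.[1] ?? withoutThinking).trim();
}

// Hypothetical helper: parse and check the payload. Both fields are required
// strings, and any other key is rejected.
function parseAnswer(raw: string): Answer {
  const data: unknown = JSON.parse(extractJson(raw));
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    throw new Error("expected a JSON object");
  }
  const record = data as Record<string, unknown>;
  const extra = Object.keys(record).filter((k) => k !== "answer" && k !== "source");
  if (extra.length > 0) {
    throw new Error(`unexpected properties: ${extra.join(", ")}`);
  }
  const { answer, source } = record;
  if (typeof answer !== "string" || typeof source !== "string") {
    throw new Error("answer and source must both be strings");
  }
  return { answer, source };
}
```

One deliberate difference: this sketch rejects unexpected keys, whereas zod's default `z.object` strips unknown keys instead of failing, so it is stricter than the chain's actual validation.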

Here is the JSON schema instance your output must adhere to in this example:

```json
{
  "type": "object",
  "properties": {
    "answer": {
      "type": "string",
      "description": "answer to the user's question"
    },
    "source": {
      "type": "string",
      "description": "source used to answer the user's question, should be a website."
    }
  },
  "required": ["answer", "source"],
  "additionalProperties": false,
  "$schema": "http://json-schema.org/draft-07/schema#"
}
```

Documenting our process

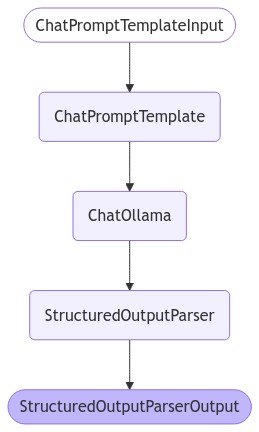

LangChain.js, Deno and Jupyter can document the flow visually:

const image = await chain.getGraph().drawMermaidPng();

const arrayBuffer = await image.arrayBuffer();

Deno.jupyter.image(new Uint8Array(arrayBuffer));Which renders something like:

Asking a question

Try asking a question:

```typescript
const response = await chain.invoke({
  question: "What is a Deno?",
  format_instructions: parser.getFormatInstructions(),
});

console.log(response);
```

Sample output:

```json
{
  "answer": "Deno is a runtime environment designed for web development...",
  "source": "https://deno.dev"
}
```

In the end

I found that Deno and Jupyter provide a fun, productive environment for local AI experiments. Most of the setup was related to running an LLM locally, which could easily be swapped for an API-hosted model from a provider such as OpenAI or Anthropic.

I already knew how low-friction Deno can be, and now I’ve seen the benefits of Jupyter Notebooks for iterative exploration and thorough documentation. It’s become my go-to environment for learning and prototyping.

Using LLMs with Deno? 🐋 🦕 🦙 We want to hear from you!

Let us know on Twitter, Discord, YouTube, BlueSky, or Mastodon.