The State of Edge Functions 2023: The Year of Globally Distributed Apps

Earlier this year, we kicked off the first edition of the State of Edge Functions Survey, where we invited developers to share their experience with and predictions about edge functions. With nearly a thousand responses, we have closed the survey and are excited to present the report. Given that edge functions are a nascent technology, we hope the report provides insight into common use cases, frustrations, and upcoming trends.

Read on for our analysis or view the full results of the survey.

Didn’t participate in this year’s report? Get notified when we open the next survey.

Edge functions as “glue code”

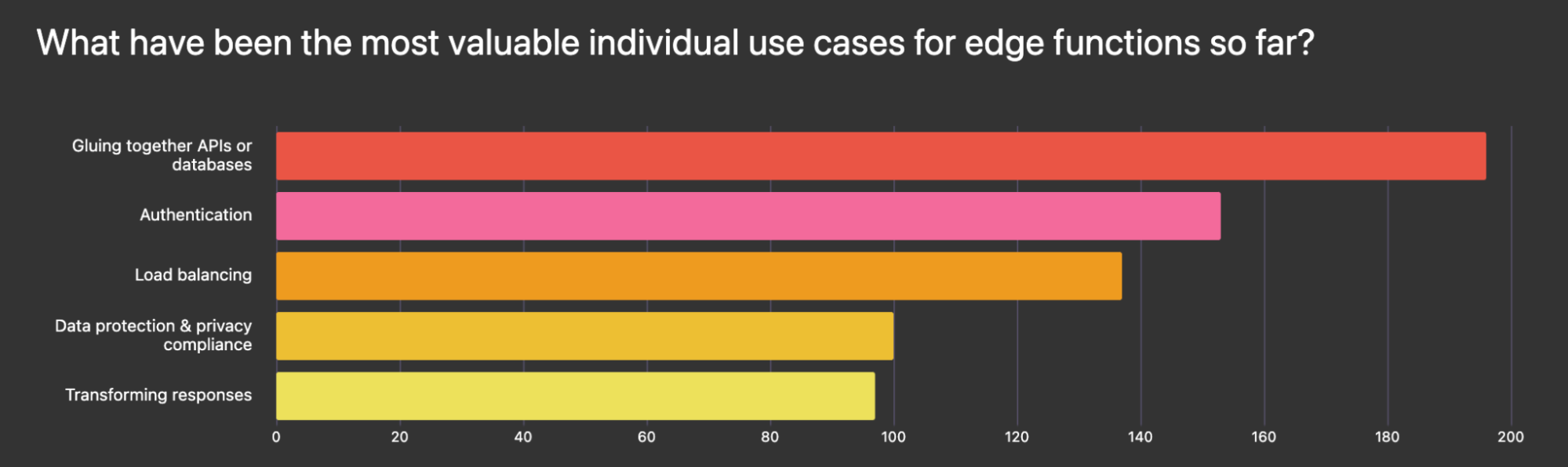

Gluing together APIs and databases was the most common use case, followed closely by authentication and load balancing:

Tying together APIs is a common use case not only for edge functions but also for serverless functions in general, since creating and deploying many single-purpose functions as microservices makes it easier for development teams to build and ship quickly.
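To make the "glue code" pattern concrete, here is a minimal sketch of a function that calls two upstream APIs in parallel and merges the results into one response body. The function name `loadDashboard`, the `FetchJson` type, and both `example.com` URLs are hypothetical and chosen only for illustration; the fetcher is passed in so the sketch can run without a network.

```typescript
// Hypothetical fetcher type: takes a URL and resolves to parsed JSON.
// In a real edge function this would likely wrap the platform's fetch().
type FetchJson = (url: string) => Promise<unknown>;

interface Dashboard {
  user: unknown;
  orders: unknown;
}

// Glue code: query two (made-up) upstream services concurrently and
// combine their payloads into a single object for the client.
async function loadDashboard(
  userId: string,
  fetchJson: FetchJson,
): Promise<Dashboard> {
  const id = encodeURIComponent(userId);
  const [user, orders] = await Promise.all([
    fetchJson(`https://users.example.com/users/${id}`),
    fetchJson(`https://orders.example.com/orders?user=${id}`),
  ]);
  return { user, orders };
}
```

Running the two requests with `Promise.all` keeps total latency close to that of the slower upstream call rather than the sum of both, which is one reason this kind of aggregation tends to sit close to the user.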

Authentication is the second most mentioned use case for edge functions. It may not have been as common for single-origin serverless functions, which lack edge functions' minimal latency. Authenticating users, critical for the vast majority of apps, demands high performance for a seamless user experience, which edge functions are uniquely suited to offer.

As the ecosystem around serverless at the edge continues to grow, we'll see more edge-specific and use-case-specific middleware, such as this minimal dependency authentication solution.
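As a rough illustration of what authentication middleware at the edge can look like, the sketch below wraps a request handler and rejects requests without a valid bearer token before any application code runs. It is not the authentication solution mentioned above; `withBearerAuth`, the `Handler` type, and the `isValidToken` callback are hypothetical names, and only the standard `Request` and `Response` web APIs are assumed.

```typescript
// A handler takes a standard Request and returns a Response.
type Handler = (req: Request) => Response | Promise<Response>;

// Hypothetical middleware: checks the Authorization header and only
// calls the wrapped handler when the token passes isValidToken.
// How tokens are validated (JWT, session lookup, etc.) is left to the caller.
function withBearerAuth(
  handler: Handler,
  isValidToken: (token: string) => boolean | Promise<boolean>,
): Handler {
  return async (req) => {
    const header = req.headers.get("authorization") ?? "";
    const match = /^Bearer\s+(.+)$/i.exec(header);
    if (!match || !(await isValidToken(match[1]))) {
      return new Response("Unauthorized", {
        status: 401,
        headers: { "www-authenticate": "Bearer" },
      });
    }
    return handler(req);
  };
}
```

Because the check happens in the location nearest the user, an unauthenticated request can be turned away without a round trip to a distant origin.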

More complex edge functions require state

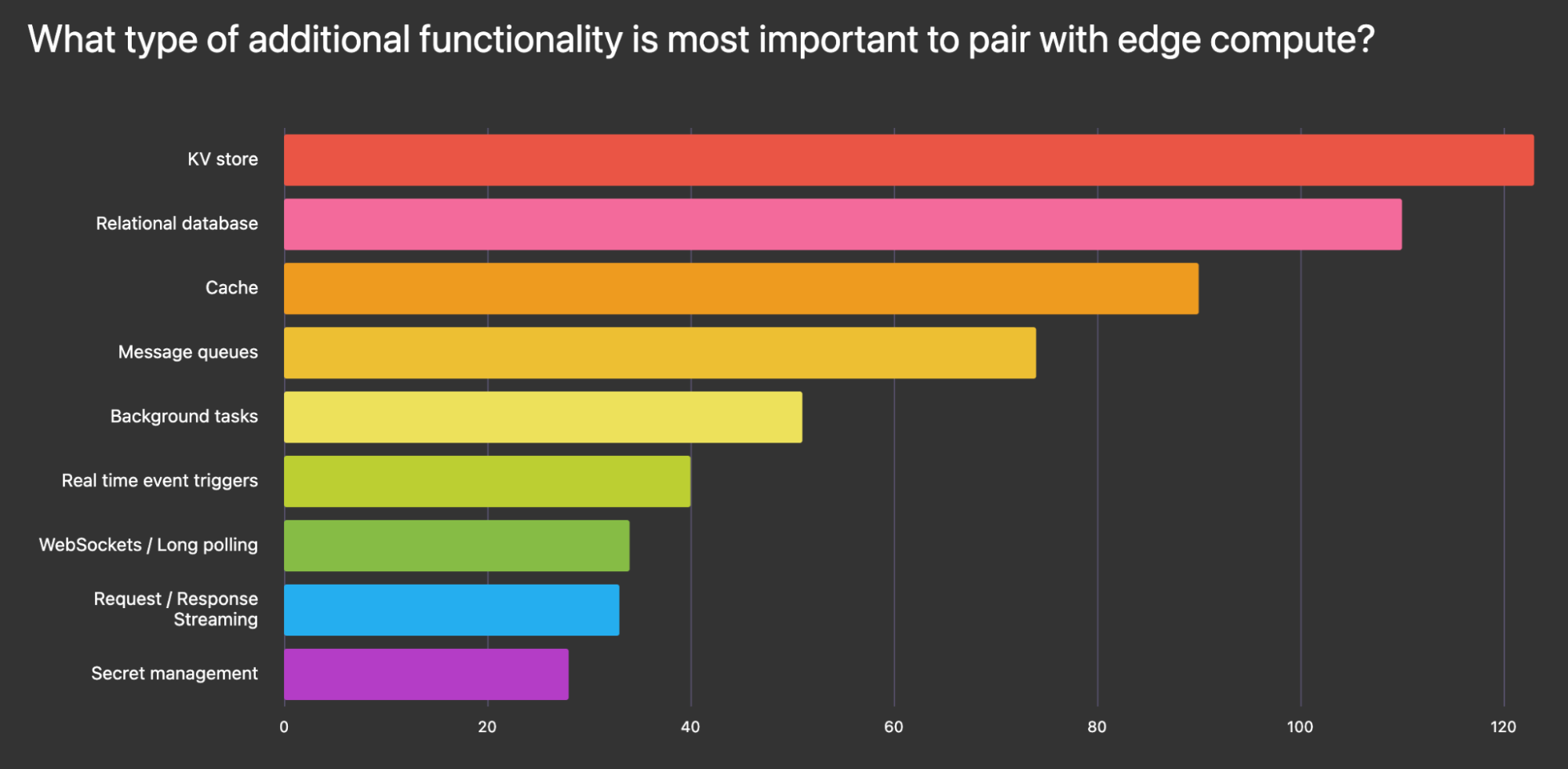

For more complex use cases, state was the most important need:

This makes sense, as persistent data storage unlocks a whole new class of use cases, such as hosting entire web apps at the edge. Specific technologies called out were Workers KV, Workers D1, and PlanetScale.

For quickly building stateful apps at the edge, Deno KV is a globally distributed, zero-config key-value store that you can connect to with a single line of code, without copying and pasting any keys.
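The sketch below shows the kind of small stateful feature a key-value store enables: a per-page visit counter. The `KvLike` interface is a hypothetical, trimmed-down shape modeled on the `get` and `set` methods of Deno KV, and `createMemoryKv` is an in-memory stand-in so the example runs anywhere. On Deno, the single line `const kv = await Deno.openKv();` would supply the real store instead.

```typescript
// Hypothetical minimal interface, modeled on Deno KV's get/set methods.
interface KvLike {
  get(key: string[]): Promise<{ value: unknown }>;
  set(key: string[], value: unknown): Promise<unknown>;
}

// In-memory stand-in for illustration only; not globally distributed.
function createMemoryKv(): KvLike {
  const store = new Map<string, unknown>();
  return {
    async get(key) {
      return { value: store.get(JSON.stringify(key)) ?? null };
    },
    async set(key, value) {
      store.set(JSON.stringify(key), value);
    },
  };
}

// Increment and return the visit count for a page.
// Note: this read-then-write is not atomic; a production counter would
// likely rely on the store's atomic operations instead.
async function recordVisit(kv: KvLike, page: string): Promise<number> {
  const key = ["visits", page];
  const entry = await kv.get(key);
  const current = typeof entry.value === "number" ? entry.value : 0;
  const next = current + 1;
  await kv.set(key, next);
  return next;
}

// On Deno, the real store would be opened with:
//   const kv = await Deno.openKv();
```

Keys are arrays of parts (here `["visits", page]`), which makes it natural to group related entries under a shared prefix.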

Edge functions mean better performance

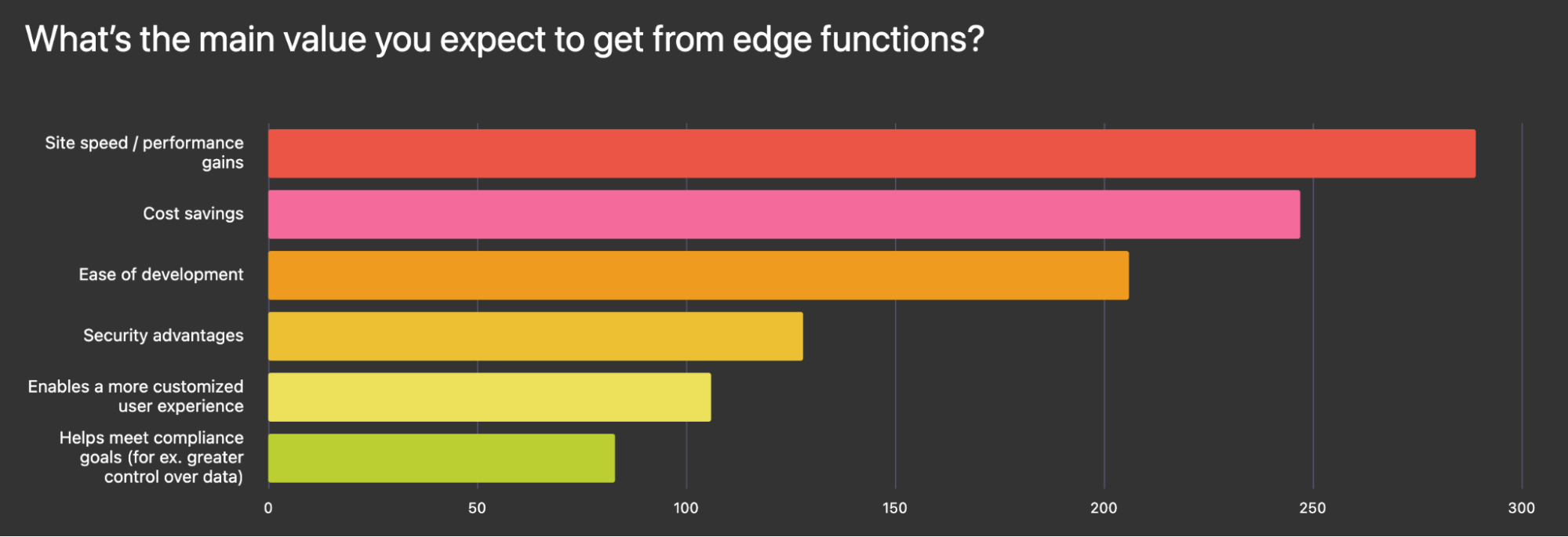

Most developers leaned on edge functions to drive site speed and performance gains, followed closely by cost savings:

Every millisecond of latency counts, especially in e-commerce, gaming, media sites, etc., where real dollars are at stake. It’s understandable that the performance boost from removing network latency by running functions, and more commonly entire web apps, at the edge is a huge value add for developers, their users, and their businesses.

Not only is time to first byte (TTFB) much lower, but with edge-native full-stack web frameworks such as Fresh, where zero JavaScript is sent to the client by default and all pages are server-side rendered, developers can build e-commerce applications that achieve a perfect PageSpeed score and maximize revenue.

Most developers struggle with debugging, testing, and observability

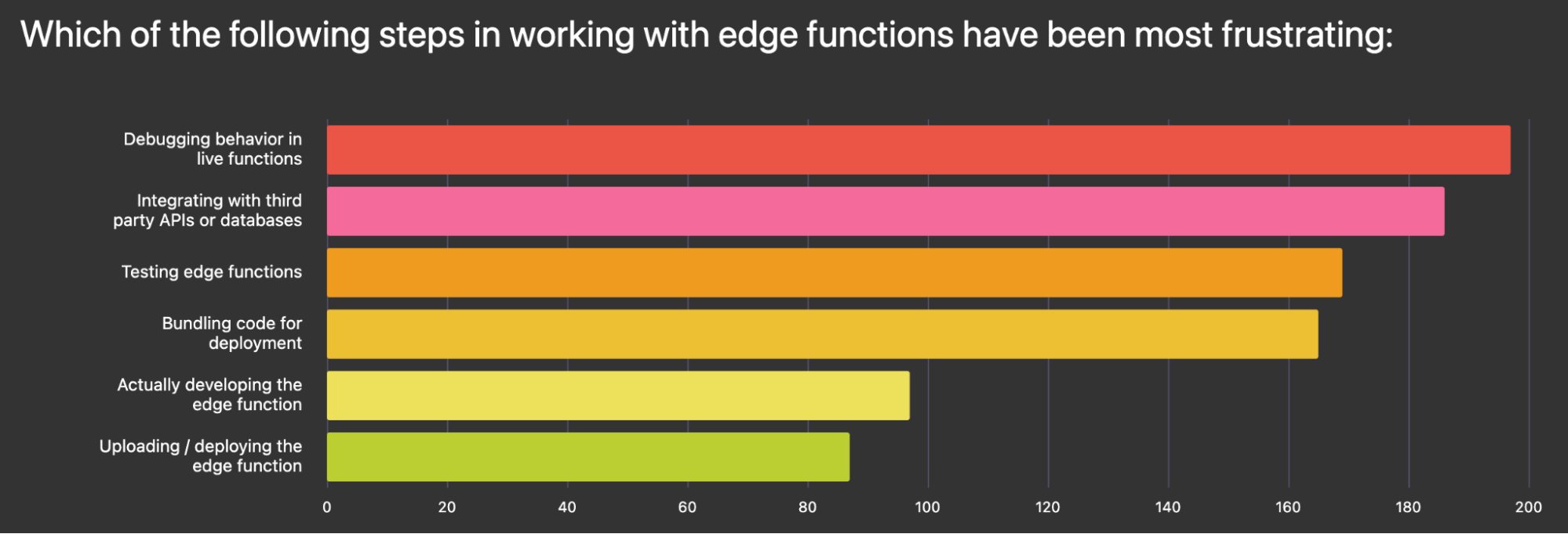

Debugging, testing, and observability were the most commonly cited challenges by developers:

Troubleshooting edge functions is challenging for two reasons: a lack of helpful logging and auxiliary tooling from edge function providers, and a lack of third-party tooling focused on edge functions. Currently, monitoring edge functions falls back to tools intended for servers or virtual private machines, such as New Relic, Splunk, or Sentry.

While testing a single edge function or microservice can be straightforward, acceptance, integration, and end-to-end (e2e) testing are difficult given the limitations of current testing frameworks. Creating reliable tests for these situations often requires development teams to build custom testing solutions.
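To illustrate why the single-function case is the easy one, here is a minimal sketch: when an edge function is a plain function from `Request` to `Response`, a test can call it directly with no server, network, or deployment. The handler `greet` and the check `testGreet` are hypothetical examples, assuming only the standard web `Request`, `Response`, and `URL` APIs.

```typescript
// Hypothetical edge function: greets the name given in the query string.
function greet(req: Request): Response {
  const name = new URL(req.url).searchParams.get("name") ?? "world";
  return new Response(JSON.stringify({ message: `Hello, ${name}!` }), {
    headers: { "content-type": "application/json" },
  });
}

// A unit test is just a direct call with a hand-built Request.
async function testGreet(): Promise<void> {
  const res = greet(new Request("https://example.com/?name=Deno"));
  const body = await res.json();
  if (res.status !== 200 || body.message !== "Hello, Deno!") {
    throw new Error("greet returned an unexpected response");
  }
}
```

The harder cases arise once several such functions, a data store, and multiple regions are involved, because a direct function call no longer exercises the behavior under test.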

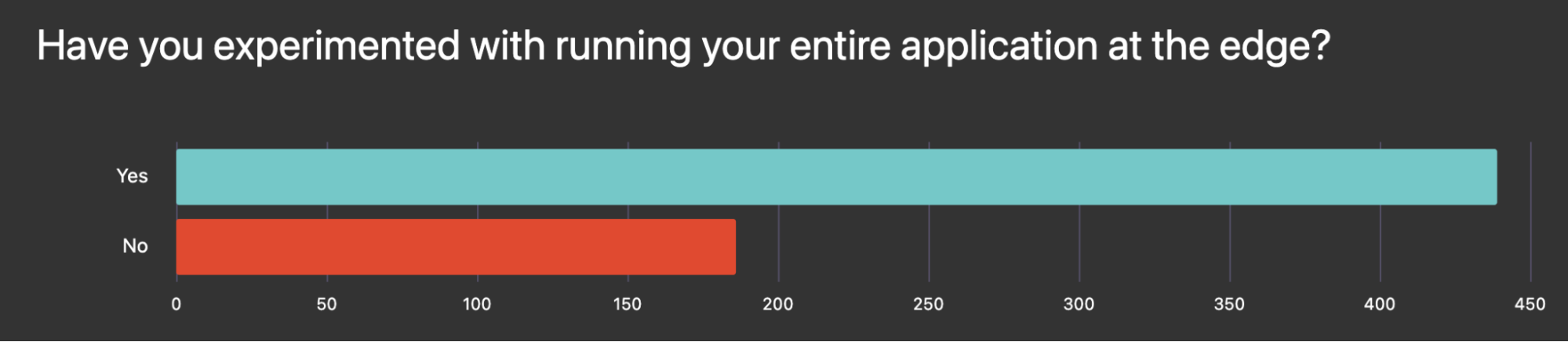

Full-scale apps at the edge are a reality

The majority of surveyed developers have built and deployed entire web apps at the edge:

In addition, more than half of surveyed developers believe that new sites and apps will run fully at the edge within the next 3 years. We agree, since web infrastructure (from machines in basements, to serverless in the cloud, to serverless at the edge) has historically trended toward better performance for users.

As the tooling and ecosystem around serverless compute at the edge mature, use cases will evolve from CDN proxies, API servers, and load balancers to full-stack SaaS, e-commerce, and other web apps, driven by improved performance and user experience.

Today, most approaches to building full-stack apps at the edge either combine a static JAMstack site with edge functions for dynamism or use an edge-native framework like HonoJS or Fresh. We anticipate more tools for globally distributed full-stack web apps, such as SaaSKit, a SaaS template built for the edge.

What’s next

Thank you to all the developers who took the survey and gave back to the serverless community through contributing to the report. If you would like to be notified about next year’s survey and report, please subscribe to the State of Edge Functions newsletter here.

Don’t miss any updates — follow us on Twitter.